I like big fog and I cannot lie

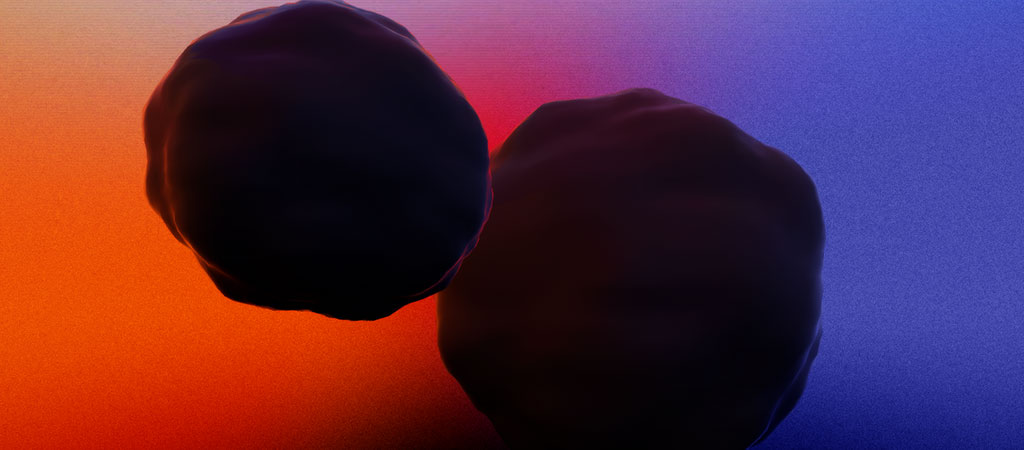

Fog is one of the best ways I've found to add a sense of depth to a scene. Check out the screenshots below, first without fog and then with it:

In the first shot, it's hard to tell where the two objects are in relation to each other. The rendering engine (Threejs in this case) knows the larger one is much further away, but without any other point of reference, the perfect clarity of each object's pixels makes it impossible for the player to tell.

By adding fog, which in this case is just a blending of colors, we perceive the larger planetoid as being much further away. In real life, the atmosphere produces this effect by scattering light: the further away something is, the more air its light has to travel through before it reaches us.

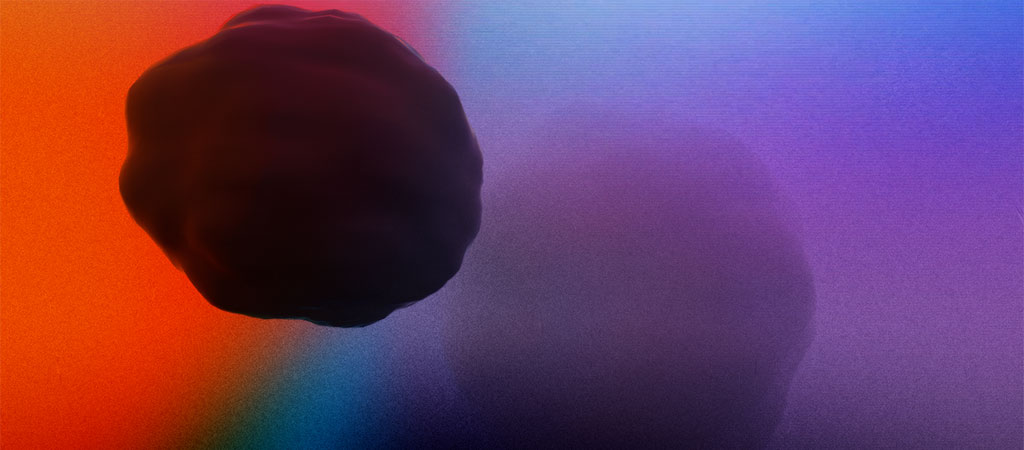

The problem I was running into is that, out of the box, Threejs only supports fog made of a single color. This works well enough if your background is a single solid color:

However, changing the scene background to anything but a solid color reveals the trick: the fog was just gradually tinting objects cyan, not actually blending them into the background. In this case, I've applied the background video texture from Always Here to the scene:

There's no way for Threejs to "know" what you consider the background. It can tell you how far away objects are from the camera and how "foggy" they should be, but beyond that it needs guidance.

The method I've used up to this point is to modify the shader chunk that Threejs uses to calculate the fog. It looks like this:

Basically: check whether fog is enabled. If it is, calculate fogFactor based on whether you're using THREE.Fog() or THREE.FogExp2(), then use fogFactor to mix the fragment color with fogColor.
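To make the math in that chunk concrete, here is a small CPU re-creation of it in plain JavaScript. It is a sketch for illustration, not Three.js code: linear fog uses smoothstep(fogNear, fogFar, depth), FogExp2 uses 1 - exp(-density² · depth²), and the result feeds a GLSL-style mix. The function names here are hypothetical.

```javascript
// CPU re-creation of the fog math in Three.js's fog_fragment chunk,
// for illustration only. Colors are [r, g, b] arrays in the 0..1 range.

// GLSL smoothstep: 0 at or below edge0, 1 at or above edge1, smooth curve between.
function smoothstep(edge0, edge1, x) {
  const t = Math.min(Math.max((x - edge0) / (edge1 - edge0), 0), 1);
  return t * t * (3 - 2 * t);
}

// THREE.Fog: ramps from 0 at `near` to 1 at `far`.
function linearFogFactor(depth, near, far) {
  return smoothstep(near, far, depth);
}

// THREE.FogExp2: 1 - exp(-density^2 * depth^2), approaching 1 with distance.
function exp2FogFactor(depth, density) {
  return 1 - Math.exp(-density * density * depth * depth);
}

// Equivalent of: gl_FragColor.rgb = mix(gl_FragColor.rgb, fogColor, fogFactor);
function applyFog(fragColor, fogColor, fogFactor) {
  return fragColor.map((c, i) => c * (1 - fogFactor) + fogColor[i] * fogFactor);
}

// A red fragment halfway through the fog range, with cyan fog.
console.log(applyFog([1, 0, 0], [0, 1, 1], linearFogFactor(75, 50, 100))); // → [0.5, 0.5, 0.5]
```

However colorful the scene is behind the object, the fragment always drifts toward the same fogColor. That is exactly the limitation shown in the cyan screenshot.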

How can we modify this to blend into the background? My idea was to comment out the color-blending line and add a new line after it that modifies the fragment's alpha value instead:

Using this with onBeforeCompile(), we can apply transparent fog to any material:
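A minimal sketch of what that could look like. Assumptions: the material is a built-in one whose fragment shader contains the standard `#include <fog_fragment>` line, the Three.js release is recent enough that the fog depth varying is named vFogDepth, and the alpha line (`gl_FragColor.a *= 1.0 - fogFactor`) is a guess, since the exact expression isn't shown here. The helper name `useTransparentFog` is hypothetical.

```javascript
// Replacement for Three.js's fog chunk: fog fades alpha instead of mixing toward fogColor.
const TRANSPARENT_FOG_CHUNK = `
#ifdef USE_FOG
  #ifdef FOG_EXP2
    float fogFactor = 1.0 - exp( - fogDensity * fogDensity * vFogDepth * vFogDepth );
  #else
    float fogFactor = smoothstep( fogNear, fogFar, vFogDepth );
  #endif
  // gl_FragColor.rgb = mix( gl_FragColor.rgb, fogColor, fogFactor );
  gl_FragColor.a *= 1.0 - fogFactor; // assumed alpha expression
#endif
`;

// Hypothetical helper: patches a material so fog makes it transparent.
function useTransparentFog(material) {
  // Alpha below 1 only blends with what's behind it when transparency is on.
  material.transparent = true;
  material.onBeforeCompile = (shader) => {
    // Includes are still unresolved at this point, so the chunk can be swapped by name.
    shader.fragmentShader = shader.fragmentShader.replace(
      '#include <fog_fragment>',
      TRANSPARENT_FOG_CHUNK
    );
  };
  return material;
}
```

Usage would be something like `useTransparentFog(new THREE.MeshStandardMaterial({ color: 0xffffff }))` with `scene.fog` set as usual, because the fog uniforms still come from the scene's THREE.Fog or THREE.FogExp2.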

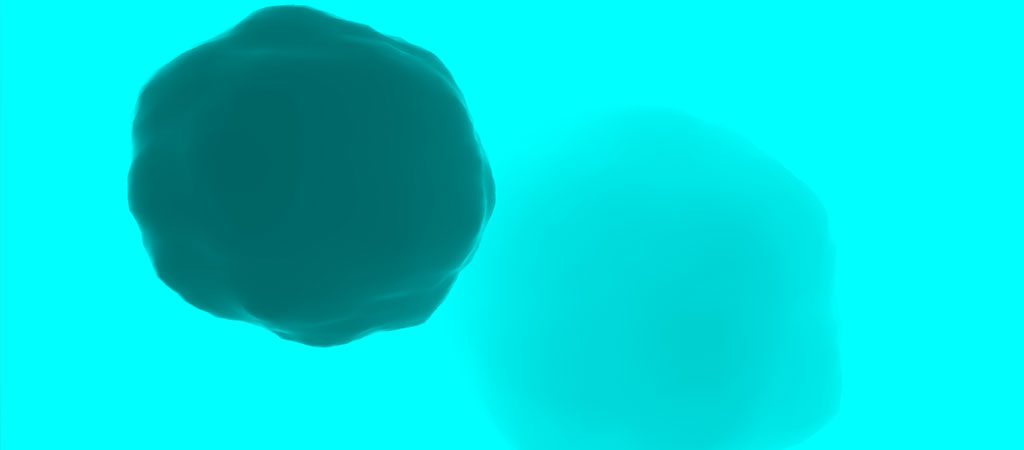

Much better - in fact, this seems to be exactly what we wanted. The more distant an object is, the more it fades into the background.

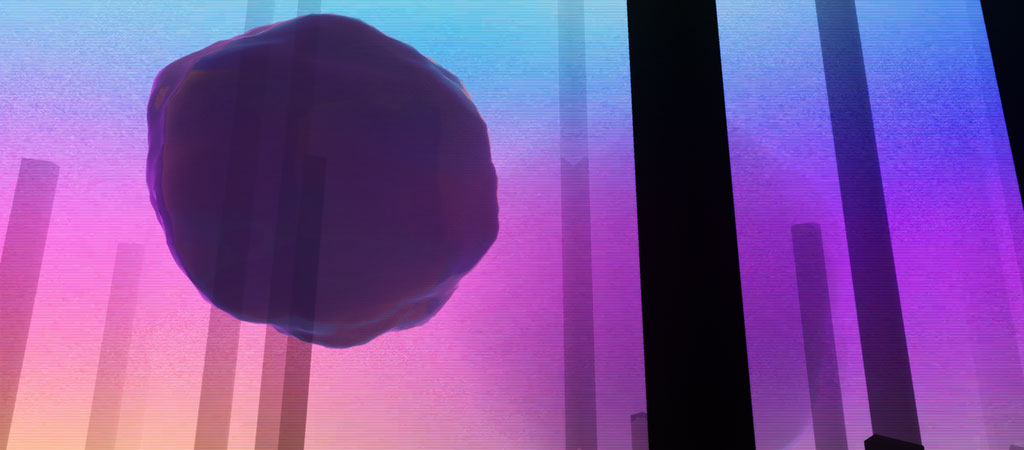

Except . . . what happens if we add more to the scene? Let's add some cosmic pillars:

Now we have issues. Three.js still doesn't know that we only want the video background to be considered the "background" — all it knows is that the further away an object is, the more transparent it should render.

As a result, the planetoid in the foreground looks more like a hologram, with the more distant pillars hazily visible behind it. The larger planetoid also shows some visual artifacts where the pillars intersect with it.

We need a way for Threejs to know that only certain objects should be blended with our "main" objects for the purposes of calculating fog. Here's the solution:

> finally figured out how to create a fog that works with dynamic colored backgrounds without using transparency. I'm gonna write a lil blog post, but the short is to render the bg objects offscreen and then sample/blend the main objects with that. #webgl #threejs #IndieGameDev pic.twitter.com/jpGKRcMC9W
>
> A Number From the Ghost (@jittercub), July 16, 2024

The first step is to enable a new layer for any object besides the scene.background that you want to be considered part of the background. In my case, in addition to the background video, I have a huge sphere called envSphere encompassing the scene with a custom shader that produces shifting gradients of color:

Next, create a new THREE.RenderTarget() to store the pixels of your background objects.

In your render loop, before you render the main scene to the screen, capture the background layer. Do this by first setting the camera layer to your background layer and setting the renderer's target to the one you just created:
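A rough sketch of the layer setup and the two-pass render loop, assuming the background layer is layer 1 (a hypothetical choice) and the camera otherwise uses only the default layer 0. It relies only on `layers.enable`, `layers.set`, `setRenderTarget`, and `render`. The text calls for a THREE.RenderTarget; the sketch accepts whatever target you pass in.

```javascript
// Hypothetical layer index for "background" objects; layer 0 is the default.
const BACKGROUND_LAYER = 1;

// Put backdrop objects (e.g. envSphere) on the background layer as well.
// layers.enable() keeps layer 0, so they still show up in the main pass.
function markAsBackground(objects) {
  for (const obj of objects) obj.layers.enable(BACKGROUND_LAYER);
}

// One frame: capture the backdrop offscreen, then draw the full scene.
function renderFrame(renderer, scene, camera, backgroundTarget) {
  // Pass 1: only background-layer objects, rendered into the offscreen target.
  camera.layers.set(BACKGROUND_LAYER);
  renderer.setRenderTarget(backgroundTarget);
  renderer.render(scene, camera);

  // Pass 2: back to the default layer, rendered to the canvas (null target).
  camera.layers.set(0);
  renderer.setRenderTarget(null);
  renderer.render(scene, camera);
}
```

In a real app, `markAsBackground([envSphere])` would run once at setup. The target could be something like `new THREE.WebGLRenderTarget(width, height)` sized to the canvas, and `renderFrame` would be called from the animation loop. If your camera normally has more layers enabled than just 0, restore those instead of calling `layers.set(0)`.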

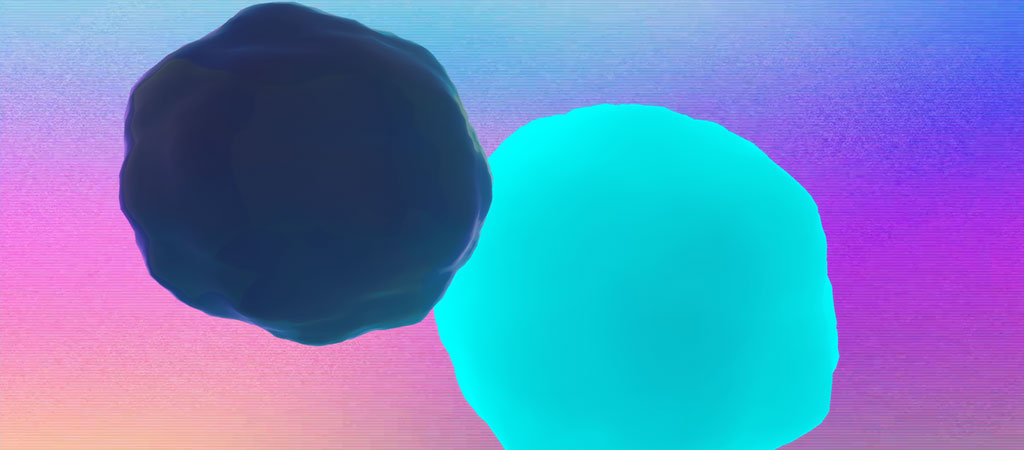

At this point we have all the information we need. We've stored a snapshot of the backdrop in an offscreen texture, and we've switched back to rendering the main scene to the screen. Now we need to handle the actual blending in the fragment shader.

The basic idea: each fragment of an object that needs fog is matched with the pixel at the same screen location in the background texture. If the fragment is far enough away to trigger fog, we blend between the fragment's color and the color at that exact spot in renderTarget.texture.

Essentially, it works exactly like the default Threejs fog snippet I copied above, except that instead of always blending with the same color (fogColor), each fragment gets its own color to blend with based on its position on the screen.
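To see the difference from single-color fog, here is a CPU model of the idea in plain JavaScript. It is an illustration, not shader code. "Images" are hypothetical flat arrays of [r, g, b] pixels in the same screen order, and each pixel fades toward the background pixel at the same index instead of toward one shared fogColor.

```javascript
// GLSL-style smoothstep, used as the linear fog ramp (like THREE.Fog).
function smoothstep(edge0, edge1, x) {
  const t = Math.min(Math.max((x - edge0) / (edge1 - edge0), 0), 1);
  return t * t * (3 - 2 * t);
}

// sceneColors, backgroundColors: arrays of [r, g, b] at matching screen positions.
// depths: distance from the camera for each scene pixel.
function fogOverBackground(sceneColors, depths, backgroundColors, near, far) {
  return sceneColors.map((color, i) => {
    const fogFactor = smoothstep(near, far, depths[i]);
    const bg = backgroundColors[i]; // the "fog color" is whatever is behind this pixel
    return color.map((c, k) => c * (1 - fogFactor) + bg[k] * fogFactor);
  });
}

// Three red pixels: close, mid-range, and far, over a varied background.
console.log(
  fogOverBackground(
    [[1, 0, 0], [1, 0, 0], [1, 0, 0]],
    [0, 75, 200],
    [[0, 0, 1], [0, 0, 1], [0, 1, 0]],
    50,
    100
  )
); // → [[1, 0, 0], [0.5, 0, 0.5], [0, 1, 0]]
```

The distant pixel ends up exactly matching the background behind it, which is what lets far objects dissolve into a moving, multicolored backdrop.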

If you're impatient, here's the whole code. You call it like this:

Breaking it down a bit: first, in the vertex shader, we copy gl_Position into a new varying called vClipPosition so the fragment shader can use it.

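Then, in the fragment shader, we calculate the fragment's "screen space" position (dividing vClipPosition.xy by vClipPosition.w gives normalized device coordinates in the -1 to 1 range) and convert that to UV coordinates in the 0 to 1 range.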

Finally, we sample the background texture at those UV coordinates and use the resulting color for our fade.
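Since the full code isn't reproduced here, the following is one possible way to wire those three steps up with onBeforeCompile. It is a sketch under assumptions, not the original implementation. It assumes a built-in material whose shaders contain `#include <common>`, `#include <project_vertex>` (which assigns gl_Position), and `#include <fog_fragment>`, plus a recent Three.js where the fog varying is vFogDepth. The uniform name `uBackgroundTex` and the helper names are hypothetical. `clipToUv` repeats the UV math in JavaScript so it can be checked.

```javascript
// The screen-space UV math from the fragment shader, mirrored in JS: clip -> NDC -> UV.
function clipToUv([x, y, , w]) {
  return [(x / w) * 0.5 + 0.5, (y / w) * 0.5 + 0.5];
}

// Fog chunk that mixes toward the background texture instead of fogColor.
const BACKGROUND_FOG_CHUNK = `
#ifdef USE_FOG
  #ifdef FOG_EXP2
    float fogFactor = 1.0 - exp( - fogDensity * fogDensity * vFogDepth * vFogDepth );
  #else
    float fogFactor = smoothstep( fogNear, fogFar, vFogDepth );
  #endif
  vec2 bgUv = ( vClipPosition.xy / vClipPosition.w ) * 0.5 + 0.5;
  vec3 bgColor = texture2D( uBackgroundTex, bgUv ).rgb;
  gl_FragColor.rgb = mix( gl_FragColor.rgb, bgColor, fogFactor );
#endif
`;

// Hypothetical helper: patches a material to fog toward the captured backdrop.
function useBackgroundFog(material, backgroundTexture) {
  material.onBeforeCompile = (shader) => {
    shader.uniforms.uBackgroundTex = { value: backgroundTexture };

    // Vertex: declare the varying and copy gl_Position once it has been computed.
    shader.vertexShader = shader.vertexShader
      .replace('#include <common>', '#include <common>\nvarying vec4 vClipPosition;')
      .replace('#include <project_vertex>', '#include <project_vertex>\nvClipPosition = gl_Position;');

    // Fragment: declare the sampler and varying, then swap in the new fog chunk.
    shader.fragmentShader = shader.fragmentShader
      .replace(
        '#include <common>',
        '#include <common>\nuniform sampler2D uBackgroundTex;\nvarying vec4 vClipPosition;'
      )
      .replace('#include <fog_fragment>', BACKGROUND_FOG_CHUNK);
  };
  return material;
}
```

Passing the raw clip position and dividing by w per fragment, instead of dividing in the vertex shader, keeps the UVs correct under perspective interpolation. A call would look something like `useBackgroundFog(planetMaterial, backgroundTarget.texture)`, where both names are placeholders.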

There are probably ways to optimize this, and there is certainly a performance cost for using a full-screen renderTarget. If you can think of any improvements, let me know!